About this Course

UConn has developed web-based continuing pharmacy education activities to enhance the practice of pharmacists and help them make sound clinical decisions that affect the outcome of anticoagulation therapy for the patients they serve. A total of 17.25 hours of CPE credit is available. Successful completion of these 17.25 hours (13 activities) or equivalent training will prepare the pharmacist for the Anticoagulation Traineeship, which is described below in the Additional Information box.

The activities below are available separately for $17/hr or as a bundle price of $199 for all 13 activities (17.25 hours). These are the pre-requisites for the anticoagulation traineeship. Any pharmacist who wishes to increase their knowledge of anticoagulation may take any of the programs below.

When you are ready to submit quiz answers, select the blue "Take Test/Evaluation" button.

Target Audience

Pharmacists who are interested in making sound clinical decisions to affect the outcome of anticoagulation therapy for the patients they serve.

This activity is NOT accredited for technicians.

Pharmacist Learning Objectives

At the completion of this activity, the participant will be able to:

- Explain the role of common laboratory tests used in monitoring of anticoagulation therapy.

- Identify an alternative to INR monitoring for warfarin therapy.

- Identify the clinical situations requiring activated whole blood clotting time (ACT) and anti-factor Xa activity monitoring for unfractionated heparin.

- Discuss the technical differences between point-of-care testing and laboratory testing and their influence on patient care.

Release Date

Released: 07/15/2025

Expires: 07/15/2028

Course Fee

$34

ACPE UAN

ACPE #0009-0000-25-039-H01-P

Session Code

25AC39-TXJ44

Accreditation Hours

2.0 hours of CE

Bundle Options

If desired, “bundle” pricing can be obtained by registering for the activities as a group. The bundle consists of the thirteen anticoagulation activities in our online selection.

You may register for individual topics at $17/CE Credit Hour, or for the Entire Anticoagulation Pre-requisite Series.

Pharmacist General Registration for 13 Anticoagulation Pre-requisite activities-(17.25 hours of CE) $199.00

In order to attend the 2-day Anticoagulation Traineeship, you must complete all of the Pre-requisite Series or the equivalent.

Additional Information

Anticoagulation Traineeship at the University of Connecticut Health Center, Farmington, CT

The University of Connecticut School of Pharmacy and The UConn Health Center Outpatient Anticoagulation Clinic have developed a 2-day, practice-based ACPE certificate continuing education activity for registered pharmacists and nurses who are interested in the clinical management of patients on anticoagulant therapy and/or who are looking to expand their practice to include management of outpatient anticoagulation therapy. This traineeship covers both the clinical and administrative aspects of a pharmacist-managed outpatient anticoagulation clinic. The activity features ample time to individualize your learning experience. A “Certificate of Completion” will be awarded upon successful completion of the traineeship.

Accreditation Statement

The University of Connecticut, School of Pharmacy, is accredited by the Accreditation Council for Pharmacy Education as a provider of continuing pharmacy education. Statements of credit for the online activity ACPE #0009-0000-25-039-H01-P will be awarded when the post-test and evaluation have been completed and the post-test has been passed with a score of 70% or better. Your CE credits will be uploaded to your CPE Monitor profile within 2 weeks of completing the program.

Grant Funding

There is no grant funding for this activity.

Requirements for Successful Completion

To receive CE credit, select the blue button labeled "Take Test/Evaluation" at the top of the page.

Type in your NABP ID, DOB and the session code for the activity. You were sent the session code in your confirmation email.

Faculty

Caitlin Raimo, PharmD Candidate 2026

UConn School of Pharmacy

Storrs, CT

Jeannette Y. Wick, RPh, MBA, FASCP

Director, Office of Pharmacy Professional Development

UConn School of Pharmacy

Storrs, CT

Faculty Disclosure

In accordance with the Accreditation Council for Pharmacy Education (ACPE) Criteria for Quality and Interpretive Guidelines, The University of Connecticut School of Pharmacy requires that faculty disclose any relationship that the faculty may have with commercial entities whose products or services may be mentioned in the activity.

Ms. Wick and Ms. Raimo have no relationships with ineligible companies and therefore have nothing to disclose.

Disclaimer

The material presented here does not necessarily reflect the views of The University of Connecticut School of Pharmacy or its co-sponsor affiliates. These materials may discuss uses and dosages for therapeutic products, processes, procedures and inferred diagnoses that have not been approved by the United States Food and Drug Administration. A qualified health care professional should be consulted before using any therapeutic product discussed. All readers and continuing education participants should verify all information and data before treating patients or employing any therapies described in this continuing education activity.

Program Content

INTRODUCTION

This module of the UConn Anticoagulation Certificate Program discusses laboratory monitoring of various anticoagulants. It builds on previous modules and repeats some information. Repetition will help consolidate learning and perhaps stimulate thought.

Coagulation Cascade

To monitor anticoagulation therapy effectively, it’s essential to first understand the coagulation cascade. This process begins with two distinct pathways—the intrinsic and extrinsic pathways—which ultimately converge at a critical step.1 At that step, Factor X is activated to Factor Xa. Factor Xa, along with Factor Va and calcium (Ca²⁺), then catalyzes the conversion of prothrombin (Factor II) into thrombin (Factor IIa). Thrombin plays a pivotal role by converting fibrinogen into fibrin, the key structural protein that crosslinks with platelets to form a stable clot.1

Two of the most widely used laboratory tests for assessing coagulation function are2

- activated partial thromboplastin time (aPTT) – primarily monitors the intrinsic pathway.

- prothrombin time (PT) – primarily monitors the extrinsic pathway.

By evaluating these tests, clinicians can assess both pathways’ functionality, making them valuable tools for screening coagulation disorders. These tests are particularly useful when investigating unexplained bleeding, as they help determine whether one or both pathways are impaired.
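The two-test screening logic above can be sketched as a small lookup. This is a hypothetical teaching aid, not a diagnostic tool: it assumes each result has already been flagged as prolonged or normal against the local laboratory's reference range, and that the PT covers the extrinsic and common pathways while the aPTT covers the intrinsic and common pathways, as described in this module. The function name and return strings are illustrative only.

```python
def suspected_pathways(pt_prolonged: bool, aptt_prolonged: bool) -> str:
    """Map PT/aPTT screening flags to the pathway(s) that may be impaired.

    Hypothetical helper. Assumes PT = extrinsic + common pathways and
    aPTT = intrinsic + common pathways.
    """
    if pt_prolonged and aptt_prolonged:
        # Both tests share the common pathway, so a defect there
        # (or defects in both pathways) can prolong both results.
        return "common pathway, or both pathways"
    if pt_prolonged:
        return "extrinsic pathway"
    if aptt_prolonged:
        return "intrinsic pathway"
    # Neither test is prolonged: consider causes of bleeding other
    # than the coagulation factors these tests measure.
    return "no pathway defect detected by these tests"


print(suspected_pathways(pt_prolonged=True, aptt_prolonged=False))
```

Real interpretation also weighs medications, sample quality, and follow-up testing; the sketch only captures which pathways each test covers.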

Prothrombin Time

PT measures the time it takes for plasma to clot after exposure to a tissue factor reagent. This test assesses both the extrinsic and common coagulation pathways. Laboratory and point-of-care machines detect clots using various methods, such as visual, optical, or electromechanical techniques, depending on the device. A normal PT range typically falls between nine and 13 seconds3; however, this range can vary significantly based on the laboratory equipment and reagents employed. Therefore, it is crucial to verify the normal range for a specific laboratory’s setup regularly to ensure accurate interpretation of results.4,5

PT and INR Standardization

The therapeutic effect of vitamin K antagonists (VKAs) is monitored with the PT and the international normalized ratio (INR). Because PT results vary greatly between laboratories, the World Health Organization (WHO) developed the INR in the 1980s as a standardized ratio specifically for VKA monitoring.6 WHO's goal was to correct for differences in tissue factor (TF) activity among PT reagents and create a common scale on which PT results could be reported consistently. The INR incorporates the International Sensitivity Index (ISI), a value that quantifies how responsive each combination of PT reagent and analyzer is relative to a WHO international reference thromboplastin. In addition, each laboratory determines its own mean normal PT (MNPT): the geometric mean PT of at least 20 normal donors of both sexes, tested on the same local analyzer and under the same test conditions as the patient's PT. The formula is INR = (patient's PT / MNPT)^ISI. A normal INR is usually in the range of 0.8 to 1.2.7,8
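The INR calculation described above can be sketched in a few lines. This is a minimal illustration, assuming PT values in seconds and an ISI supplied by the reagent manufacturer; the function names are hypothetical, and in practice the analyzer software performs this calculation.

```python
import math


def mean_normal_pt(donor_pts):
    """Geometric mean PT (MNPT), in seconds, of normal donor samples."""
    if len(donor_pts) < 20:
        # The text calls for at least 20 normal donors of both sexes.
        raise ValueError("MNPT requires at least 20 normal donors")
    # Geometric mean = exp(average of the natural logs).
    return math.exp(sum(math.log(pt) for pt in donor_pts) / len(donor_pts))


def inr(patient_pt, mnpt, isi):
    """INR = (patient PT / MNPT) raised to the power of the ISI."""
    return (patient_pt / mnpt) ** isi


# Hypothetical donor data: ten donors at 11.0 s and ten at 13.0 s.
mnpt = mean_normal_pt([11.0] * 10 + [13.0] * 10)
print(round(mnpt, 2), round(inr(24.0, mnpt, 1.2), 2))
```

With an ISI near 1.0, the INR is close to the simple PT ratio; a reagent with a higher ISI (less sensitive than the reference) has its ratio raised to a larger exponent, which is how the INR puts results from different reagents on a common scale.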

Clinical Uses of PT/INR

When patients present with unexplained bleeding, measuring the PT/INR can provide valuable insights. An elevated PT/INR suggests a potential issue within the patient’s coagulation system, indicating that something may be wrong.4,5 Conversely, if the PT is normal, healthcare providers may need to investigate alternative causes for the bleeding, as the coagulation factors may not be the source of the problem. Table 1 describes several important clinical applications for the PT and INR.4,5

Table 1. Clinical Uses for the Prothrombin Time and International Normalized Ratio4,5

| Clinical use | Notes |
| --- | --- |
| Warfarin monitoring | PT and INR are crucial for managing patients on warfarin therapy, as warfarin interferes with the synthesis of certain clotting factors, including factor II (prothrombin). |
| General assessment of anticoagulation state | These tests provide a general overview of a patient's anticoagulation status, helping clinicians determine if the anticoagulation therapy is effective. |
| Assessment of liver disease and synthetic function | The PT can also reflect the synthetic function of the liver, as the liver produces several clotting factors. Prolonged PT may indicate liver dysfunction. |
| Diagnosing disseminated intravascular coagulation (DIC) | An elevated PT/INR can serve as a diagnostic indicator for DIC, a serious condition characterized by widespread clotting and subsequent bleeding. |

ABBREVIATIONS: INR = International Normalized Ratio; PT = prothrombin time

Factors That Prolong the PT/INR

Several factors can influence PT/INR, complicating the monitoring of patients on warfarin therapy4,5,9:

- Warfarin: The primary medication affecting PT/INR, warfarin requires careful monitoring to ensure that patients remain within the therapeutic range.

- Other anticoagulant drugs: Direct oral anticoagulants (DOACs) and argatroban can impact PT/INR readings. For instance, transitioning a patient from argatroban to warfarin requires caution, as argatroban significantly increases INR measurements, potentially leading to misleading results.

- Heparin and low molecular weight heparins (LMWH): Although traditional references indicate that these medications affect PT measurements, modern reagents and laboratory techniques typically correct for this interference, making it less of a clinical concern.

- Liver disease: The PT is a reliable indicator of both anticoagulation status and liver function. Patients with severe liver disease often present with elevated PT values due to impaired clotting factor synthesis.

- Vitamin K deficiency: Poor dietary intake or nutritional issues can lead to vitamin K deficiency, resulting in elevated PT levels.

- Coagulation factor deficiencies: Genetic disorders that reduce the production of specific coagulation factors can also manifest as elevated PT, even if they are not related to liver disease.

- Antiphospholipid antibodies: The presence of these antibodies can influence PT measurements, adding another layer of complexity in monitoring anticoagulation.

It is crucial to consider these factors when monitoring patients on warfarin therapy, as they can significantly affect PT/INR results beyond the effects of the medication itself.

Understanding Warfarin Monitoring and Its Mechanism

Warfarin inhibits the production of vitamin K-dependent coagulation factors, specifically Factors II, VII, IX, and X, along with proteins C and S. The INR is particularly effective for monitoring warfarin therapy because the underlying PT is sensitive to reductions in Factors II, VII, and X.10

Anticoagulation does not occur immediately upon achieving adequate warfarin serum levels. Instead, anticoagulation begins when the serum levels of affected coagulation factors decrease. Warfarin reduces the production of these factors rather than directly affecting them. The half-lives of these factors vary significantly11,12:

- Factor VII has a short half-life of approximately three to six hours, causing it to decrease quickly.

- Factor II (prothrombin) has a long half-life of about 24 to 48 hours (and up to 60 hours), making it the most significant factor in determining the time it takes for warfarin to become effective.

This long half-life is why it takes a couple of days for warfarin to exert its full anticoagulant effect; the therapy is essentially waiting for Factor II levels to decrease adequately.
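The effect of these half-lives can be illustrated with a simple first-order decay model. This is an idealized sketch, assuming warfarin completely stops new factor synthesis at time zero and that each factor decays exponentially; neither assumption holds exactly in patients. The half-lives used (6 hours for Factor VII, 48 hours for Factor II) are picked from the ranges quoted above for illustration.

```python
def fraction_remaining(hours, half_life_hours):
    """Fraction of a pre-existing factor left after `hours`, assuming
    first-order decay and no new synthesis (an idealization)."""
    return 0.5 ** (hours / half_life_hours)


# Illustrative half-lives chosen from the ranges quoted in the text.
HALF_LIVES = {"Factor VII": 6.0, "Factor II": 48.0}

for day in (1, 2, 3, 5):
    hours = 24 * day
    # Percent of baseline activity remaining for each factor on this day.
    levels = {
        factor: round(100 * fraction_remaining(hours, t_half))
        for factor, t_half in HALF_LIVES.items()
    }
    print(f"Day {day}: {levels}")
```

Under these assumptions, Factor VII falls to about 6% of baseline within the first day, while Factor II is still at half of baseline after two days, which mirrors why the full anticoagulant effect lags behind the early INR rise.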

Monitoring Warfarin Therapy: When and How Often?

The frequency of monitoring may vary depending on whether the patient is treated in an inpatient or outpatient setting. In 2018, The Joint Commission published National Patient Safety Goals for anticoagulant therapy, highlighted below.13

Baseline PT/INR Testing

Before initiating warfarin therapy, obtaining a baseline PT/INR level is crucial. The Joint Commission mandates this step to identify any underlying coagulation factor deficiency that could affect future monitoring.13 Clinicians can order baseline testing on the day therapy starts or use a previous test, if the results are relevant. For stable patients, a result from a month or two ago may suffice. However, if the patient has fluctuating liver function or other issues, the baseline should be determined from a more recent test.13

Monitoring Schedule After Initiating Warfarin

After establishing the baseline, the timing for subsequent INR testing is essential. Testing the INR the day after the first dose of warfarin may not provide significant insights since coagulation factors usually take at least two days to decrease.11,13 In rare cases, if a higher-than-expected dose is administered, especially in elderly patients with comorbidities and drug interactions, a noticeable increase in INR might occur within the first day or two. However, routine daily testing is typically unnecessary.

Generally, clinicians don't see significant changes until day three after starting therapy.12 Warfarin’s full effects are usually evident within five to seven days.12

Testing frequency depends on how stable the patient is. Unstable patients may need testing every few days to once a week.12 Clinicians can monitor stable patients less frequently, often every four weeks or, in some cases, every 12 weeks, although the optimal interval for stable patients is debated. Other modules will discuss lengthening the testing interval.12

PAUSE AND PONDER: What alternative methods can we use to monitor warfarin therapy without relying on PT/INR?

The alternative method for monitoring warfarin therapy in patients with antiphospholipid antibody syndrome is to measure Factor II or Factor X activity levels.14 (In other patients, measuring these indices may confirm pharmacodynamic issues.) While the PT/INR tests provide a broad assessment of coagulation factors affected by warfarin, monitoring just one of these factors can be sufficient.14 Ordering a factor level test is typically straightforward, similar to any other blood test. However, clinicians must ensure that the lab understands that a Factor X activity level, not an anti-Factor Xa level, is needed. These are different tests altogether.

Factor activity levels are reported as a percentage of normal, indicating how much the factor has decreased compared to a healthy individual. For instance, a Factor X level between 24% and 45% of normal correlates with an INR of 2-3.15-17 This approach allows continuous monitoring of these patients without relying on INR, which may be unreliable due to the interference caused by their condition. The module on challenging topics covers this subject in more depth.18
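The correspondence described above can be expressed as a simple range check. This sketch assumes the 24% to 45% band quoted in the text as the target corresponding to an INR of 2 to 3; the function name and messages are hypothetical, and any real target band should come from the treating institution's protocol.

```python
def classify_factor_x(activity_pct, low=24.0, high=45.0):
    """Classify Factor X activity (% of normal) against a target band.

    Assumes the 24-45% band quoted in the text corresponds to INR 2-3.
    Lower activity means a stronger warfarin effect.
    """
    if activity_pct < low:
        return "below target band (more anticoagulated than INR 2-3)"
    if activity_pct > high:
        return "above target band (less anticoagulated than INR 2-3)"
    return "within target band (roughly INR 2-3)"


print(classify_factor_x(30.0))
```

The inverted direction is the key design point: unlike the INR, where a higher number means more anticoagulation, a lower Factor X activity means more anticoagulation.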

Activated Partial Thromboplastin Time

The aPTT, or simply PTT, is a test that measures the time it takes for plasma to clot after exposure to a reagent containing a contact activator and phospholipid; importantly, unlike the PT, it does not use tissue factor. The aPTT specifically assesses the intrinsic pathway down to the common pathway.4,5

Normal Ranges and Variability

The normal range for aPTT is highly dependent on the laboratory equipment and reagents used, similar to PT testing. However, unlike PT, there is no standardization mechanism like the INR to correct aPTT results. Each laboratory, along with its reagents and testing equipment, will have its own normal values, generally falling between 25 and 35 seconds.19

Additionally, the behavior of aPTT results can vary significantly. For example, the aPTT lengthens as the heparin concentration rises,19 but the degree of prolongation can differ between labs, and within the same lab when it changes reagent manufacturers or reagent lot numbers. Therefore, continuous testing and calibration are essential to ensure accuracy in aPTT results.

Establishing aPTT Therapeutic Range for Heparin Monitoring

Given the variability in aPTT testing, it is crucial to ensure that the therapeutic range for heparin is accurately established based on the specific reagents and tests being used. Each laboratory must perform a correlation whenever it introduces new equipment or switches reagent lot numbers.

Guidelines for Establishing PTT Range

Laboratories must adapt the PTT range based on the responsiveness of the reagent and the coagulometer in use.20,21 The recommended approach is as follows:

- Select a therapeutic PTT range: This range should correlate with a heparin level of 0.3 to 0.7 units/mL.

- Sample collection: Collect blood samples from patients receiving heparin therapy.

- Testing with anti-Factor Xa: Measure the heparin levels in the blood using an anti-Factor Xa test.

- Regression analysis: Conduct a regression analysis to determine the appropriate PTT range that corresponds to the established therapeutic range for your heparin protocol.

This process ensures that the PTT monitoring accurately reflects heparin’s therapeutic effect, thereby enhancing patient safety and treatment efficacy.
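The regression step above can be sketched as an ordinary least-squares fit of aPTT against anti-Factor Xa heparin level, followed by reading off the aPTT predicted at 0.3 and 0.7 units/mL. This is a minimal illustration with hypothetical data (not from any real cohort); laboratories may use other models, such as a log-transformed aPTT, and typically rely on validated statistical software.

```python
def least_squares(xs, ys):
    """Ordinary least-squares fit: y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def aptt_range_for_heparin(anti_xa, aptt, low=0.3, high=0.7):
    """Regress aPTT (seconds) on anti-Xa heparin level (units/mL) and
    return the aPTT values predicted at the ends of the heparin range."""
    slope, intercept = least_squares(anti_xa, aptt)
    return slope * low + intercept, slope * high + intercept


# Hypothetical paired samples from patients on heparin (illustrative only).
anti_xa_levels = [0.2, 0.4, 0.5, 0.6, 0.8]
aptt_seconds = [45, 75, 90, 105, 135]
print(aptt_range_for_heparin(anti_xa_levels, aptt_seconds))
```

Because the fit depends on the reagent and analyzer that produced the aPTT values, the same calculation has to be repeated with fresh paired samples whenever the reagent lot or equipment changes.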

Interpreting Regression Analysis for Heparin Monitoring

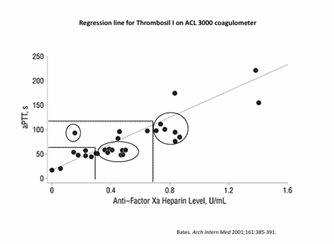

Figure 1. Regression Analysis for Heparin Monitoring22

Figure 1 illustrates a regression analysis used to establish the therapeutic range for heparin monitoring. In this analysis, researchers evaluated a cohort of patients to determine their PTT values in relation to their anti-Factor Xa heparin levels. By plotting these data on a graph and drawing reference lines, the laboratory defined the appropriate PTT therapeutic range for its heparin protocols. In this cohort, the therapeutic PTT range appeared to be approximately 60 to 120 seconds, represented by the horizontal lines that correspond to heparin levels of 0.3 to 0.7 units/mL.22

However, as highlighted in the circled areas, some clusters of patients showed significant variability:22

- Supra-therapeutic patients: The group in the upper right corner appeared to have PTT values within the therapeutic range but were actually supra-therapeutic with respect to their heparin levels.

- Sub-therapeutic patients: Conversely, the circled group in the middle had PTT values indicating they were slightly sub-therapeutic, even though their actual heparin levels were adequate.

This variability can lead to unnecessary dose adjustments, as some patients may be treated for perceived under-dosing although their anticoagulation therapy is effective.
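The discordance shown in the circled clusters can be expressed as a comparison of two classifications. This sketch assumes the 60 to 120 second aPTT range from the Figure 1 cohort and the 0.3 to 0.7 units/mL anti-Factor Xa range; the names and category labels are hypothetical, and any real ranges would come from the local laboratory.

```python
APTT_RANGE = (60.0, 120.0)   # seconds, from the Figure 1 cohort
ANTI_XA_RANGE = (0.3, 0.7)   # units/mL heparin


def classify(value, low, high):
    """Place a result below, within, or above a target range."""
    if value < low:
        return "sub"
    if value > high:
        return "supra"
    return "therapeutic"


def discordant(aptt_s, anti_xa):
    """True when aPTT and anti-Xa place the patient in different categories."""
    return classify(aptt_s, *APTT_RANGE) != classify(anti_xa, *ANTI_XA_RANGE)


# Like the upper-right cluster: aPTT looks therapeutic, heparin level is high.
print(discordant(100.0, 0.9))
# Like the middle cluster: aPTT looks low, heparin level is adequate.
print(discordant(55.0, 0.5))
```

Each discordant pair is a patient whose dose might be changed on the basis of the aPTT even though the heparin level points the other way.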

The take-home message is clear: interpatient variability and differences in testing methods require ongoing adjustment of the therapeutic range for heparin protocols. Each time a laboratory receives a new reagent lot or changes equipment, it must re-evaluate and adjust the therapeutic range accordingly to ensure optimal patient care.22

Clinical Uses of aPTT

Table 3 summarizes several important clinical uses of the aPTT.

Table 3. Clinical Uses of the aPTT4,5

| Indication | Clinical Pearls |
| --- | --- |
| Monitoring heparin therapy | Therapeutic ranges are typically established at 1.5 to 2.5 times the control value of the PTT. However, the most accurate method for determining this range is regression analysis, as recommended by the CHEST guidelines and other major medical organizations. |
| Monitoring direct thrombin inhibitors (DTIs) | The aPTT can also be used to monitor patients receiving injectable DTIs (e.g., argatroban). |
| General assessment of anticoagulation status | The aPTT serves as a general screening tool to assess the overall state of the coagulation system. |
| Diagnosing DIC | |

ABBREVIATIONS: aPTT = activated partial thromboplastin time; DIC = disseminated intravascular coagulation; DTI = direct thrombin inhibitor
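The fixed-ratio approach in Table 3 is simple arithmetic, sketched here for comparison with the regression method described earlier. The 30-second control value is a hypothetical example; the function name is illustrative.

```python
def fixed_ratio_range(control_aptt_s, low_ratio=1.5, high_ratio=2.5):
    """Therapeutic aPTT range as 1.5 to 2.5 times the control aPTT (seconds)."""
    return control_aptt_s * low_ratio, control_aptt_s * high_ratio


# Hypothetical control aPTT of 30 seconds.
print(fixed_ratio_range(30.0))
```

The fixed ratio ignores how responsive a given reagent is to heparin, which is why a regression against anti-Factor Xa levels with the same reagent can produce a noticeably different range from the same control value.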

Factors That Prolong aPTT

Several factors can lead to an elevated aPTT23:

- Heparin and DTIs are well-known for prolonging the aPTT due to their mechanisms of action within the coagulation cascade.

- DOACs can prolong the aPTT, but this test is not reliable for monitoring these agents.

- Liver disease impairs the synthesis of clotting factors and may prolong the aPTT, which serves as a general marker of coagulation status.

- Specific factor deficiencies, such as hemophilia A (Factor VIII deficiency) or hemophilia B (Factor IX deficiency), will result in a prolonged aPTT.

- Antiphospholipid antibodies can interfere with phospholipid-dependent coagulation tests, prolonging the aPTT in the test tube. This prolongation does not mean the patient is protected from clotting; patients with antiphospholipid syndrome may still experience thrombotic complications.

Understanding these factors is crucial for interpreting aPTT results accurately and making informed decisions regarding patient management.

THROMBIN TIME OVERVIEW

Thrombin time (TT) is a laboratory test that measures the time it takes for fibrinogen to convert to fibrin,24 marking the final step in the coagulation cascade. Unlike other coagulation tests, TT specifically focuses on this critical transition, which results in clot formation. The normal range for TT typically falls between 14 and 19 seconds; as with the other tests, however, this range depends heavily on the specific laboratory equipment, reagents, and methods used.24

Clinical Uses of TT

TT is not commonly used as a broad screening tool due to its specificity to the final step of coagulation. However, Table 4 lists the clinical situations in which it is invaluable.

Table 4. Situations in Which TT Is Invaluable24

| Situation | Notes |
| --- | --- |
| Evaluation of fibrinogen disorders | Abnormalities in the conversion of fibrinogen to fibrin can indicate problems with fibrinogen production or function. Abnormal TT results may point to disorders that affect fibrinogen levels or structure. |
| Detection of heparin | If it is unclear whether a plasma sample has been contaminated with heparin, the TT, which is highly sensitive to heparin, can help confirm its presence. This is especially useful when other tests are inconclusive. |
| Diagnosis of DIC | In DIC, which involves excessive clotting followed by bleeding, abnormalities in fibrinogen levels and conversion can be detected as a prolonged TT. |

ABBREVIATIONS: DIC = disseminated intravascular coagulation; TT = thrombin time

Although TT is a valuable test for specific conditions, it is not typically used as a general screening test for coagulation disorders.24 Since it measures only the final step of the coagulation process, it does not provide comprehensive insight into earlier steps in the cascade. Therefore, its use is generally reserved for diagnosing issues directly related to fibrinogen conversion, such as inherited fibrinogen disorders, heparin contamination, or DIC.24

ACTIVATED WHOLE BLOOD CLOTTING TIME

The activated whole blood clotting time (ACT) is a laboratory test used to assess the time it takes for whole blood to clot when exposed to a reagent that activates the intrinsic pathway of coagulation.25 Unlike other coagulation tests that use plasma samples, ACT measures the clotting time of whole blood, making it unique in this regard. The normal range for ACT typically falls between 70 and 120 seconds, although the time can vary depending on the specific laboratory equipment, reagents, and protocols used. As with other coagulation tests, the normal range is highly dependent on the testing environment.25

Clinical Uses of ACT

ACT is primarily used in clinical settings where large doses of heparin are administered, such as during procedures in a cardiac catheterization lab (Cath Lab) or during cardiopulmonary bypass. Table 5 explains these uses in more detail. In these settings, ACT is a quicker, more reliable alternative to other coagulation tests for measuring the effects of high doses of heparin.

Table 5. Clinical Uses for the Activated Whole Blood Clotting Time25

| Use | Notes |
| --- | --- |
| Monitoring heparin during large doses | Heparin is commonly used to prevent clotting during invasive procedures, including those in the Cath Lab. Regular heparin infusions usually start with a bolus dose of around 80 units/kg. However, during procedures such as cardiopulmonary bypass, heparin doses can reach 300 to 400 units/kg. At these doses, other coagulation tests, such as the PTT, become excessively prolonged and unreliable. |
| Bedside use in cardiac procedures | ACT provides quick bedside results for cardiac procedures that require real-time monitoring of anticoagulation, so clinicians can adjust heparin administration during critical procedures if needed. |
| Cardiopulmonary bypass (e.g., open-heart surgery) | Heparin is used to prevent clotting in the bypass machine. In these cases, the target ACT is generally above 480 seconds to ensure proper anticoagulation. For less invasive procedures such as cardiac catheterization, the target range is typically 300 to 350 seconds. |

ABBREVIATIONS: ACT = activated whole blood clotting time
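Because ACT targets depend on the procedure, a check has to look up the procedure first. This sketch encodes only the two targets quoted in Table 5; the dictionary keys and function name are hypothetical, the "above 480 seconds" target is treated here as a minimum of 480 seconds, and local protocols may use different values.

```python
# Target ACT ranges in seconds, as quoted in Table 5 (hypothetical keys).
# None means no upper limit is specified.
ACT_TARGETS = {
    "cardiopulmonary_bypass": (480.0, None),    # "generally above 480 seconds"
    "cardiac_catheterization": (300.0, 350.0),  # "typically 300 to 350 seconds"
}


def act_status(procedure, act_seconds):
    """Compare an ACT result with the target for the given procedure."""
    low, high = ACT_TARGETS[procedure]
    if act_seconds < low:
        return "below target"
    if high is not None and act_seconds > high:
        return "above target"
    return "at target"


print(act_status("cardiopulmonary_bypass", 400.0))
print(act_status("cardiac_catheterization", 320.0))
```

The same 400-second result is below target on bypass but above target in the catheterization lab, which is why ACT results must be read in the context of the procedure.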

While ACT is an essential test in certain high-risk procedures, it is not routinely used for general anticoagulation monitoring.25 It is most valuable in settings where high doses of heparin are required and where quick, real-time results are crucial. Additionally, target ACT ranges vary with the specific procedure being performed, so results must be interpreted within the context of the clinical setting.25

PRACTICE PATIENT CASE

A 41-year-old woman pregnant with twins is admitted for a deep vein thrombosis (DVT). She begins a heparin infusion with an 80 units/kg bolus, followed by 18 units/kg/hour. Over time, her infusion rate is titrated up to approximately 2000 units/hour, which equates to 25.7 units/kg/hour, a relatively high heparin dose. Although her coagulation results appear to be in the therapeutic range, she develops gross hematuria, and her hemoglobin drops from 10.4 to 7.9 g/dL over four days. Given these complications, the medical team wants to know why she requires such a large heparin infusion and whether the rate should be reduced.

Key Questions to Ask

- Is the patient's weight correct? The first thing to check is whether the patient’s weight was accurately recorded on the scale and entered into the infusion pump. This ensures the proper amount of heparin is being delivered based on her body mass. If the weight was incorrect, it could explain why she is receiving an excessive amount of heparin.

- Was the infusion rate correctly calculated? It's crucial to verify that the infusion rate calculation was accurate. Errors in dosage calculations can lead to the patient receiving more heparin than intended, potentially contributing to bleeding complications like the one seen in this case.

- Is the patient’s lab work accurate and correctly matched? Mislabeling or mixing up lab work can lead to incorrect patient data being used, which could explain why the infusion rate appears to be appropriate when it is actually too high. Checking that the lab results are from the correct patient is essential.
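The first two checks are arithmetic that can be sketched quickly. The case does not state the patient's weight, so this sketch back-calculates the weight implied by 2000 units/hour at 25.7 units/kg/hour (about 77.8 kg) and treats it as an assumption; the function names are hypothetical. Comparing the implied weight with a freshly measured weight is one way to catch a weight-entry or calculation error.

```python
def units_per_kg_per_hour(rate_units_per_hour, weight_kg):
    """Weight-based dose delivered by a given infusion rate."""
    return rate_units_per_hour / weight_kg


def implied_weight_kg(rate_units_per_hour, dose_units_per_kg_per_hour):
    """Weight the pump must be using for a given rate/dose pair."""
    return rate_units_per_hour / dose_units_per_kg_per_hour


# Assumed weight, back-calculated from the case (about 77.8 kg).
weight = implied_weight_kg(2000, 25.7)
bolus_units = 80 * weight      # 80 units/kg bolus from the case
start_rate = 18 * weight       # 18 units/kg/hour starting infusion
reduced_dose = units_per_kg_per_hour(1500, weight)  # after the rate cut

print(round(weight, 1), round(bolus_units), round(start_rate), round(reduced_dose, 1))
```

If the measured weight were substantially lower than the implied weight, the pump would be delivering more heparin per kilogram than intended.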

Testing for Heparin Levels

If the above factors are ruled out and the patient's weight, infusion calculations, and lab results all appear correct, testing the anti-factor Xa level is the next logical step. This test measures the level of heparin activity in the blood and provides a more reliable measure of anticoagulation than other tests like the PTT, which can be influenced by various patient-specific factors.

- Anti-factor Xa level: This test would reveal the true extent of heparin activity in the bloodstream. The patient's remarkably elevated anti-Xa levels indicate that she has far more heparin in her system than the therapeutic range calls for, even though her other monitoring appeared therapeutic. This discrepancy suggests apparent heparin resistance.

Management and Resolution

Upon discovering the elevated anti-Xa levels, the team reduced the infusion rate to 1500 units/hour, which is closer to typical dosing for patients with this clinical presentation. Following this adjustment, the patient's symptoms resolved, and she no longer experienced the bleeding complications associated with excessive heparin.

This case illustrates the importance of monitoring heparin therapy and being aware of apparent heparin resistance. Even though the patient's monitoring suggested a therapeutic dose of heparin, the excessive infusion led to bleeding complications. Testing the anti-factor Xa level identified the underlying issue so it could be corrected. This highlights the need for accurate dosing calculations, laboratory testing of heparin levels, and consideration of true or apparent heparin resistance in patients who require unusually high doses of the drug to achieve anticoagulation.

HEPARIN RESISTANCE AND APPARENT HEPARIN RESISTANCE

Heparin resistance can be categorized into two types: true resistance and apparent resistance.20 Understanding the differences between these is crucial for diagnosing and managing patients who require unusually high doses of heparin, as was the case with the 41-year-old pregnant patient.

True Heparin Resistance

In true heparin resistance, the patient does not respond to heparin despite receiving therapeutic or even higher-than-expected doses.26 This can happen due to several pharmacokinetic or pharmacodynamic factors, including26

- Increased heparin clearance: The patient's body may metabolize heparin faster than expected, requiring higher doses to maintain therapeutic anticoagulation.

- Increased heparin-binding proteins: Some patients have elevated levels of plasma proteins that bind to heparin, effectively reducing the amount of free, active heparin available to exert its anticoagulant effect.

- Altered volume of distribution: Changes in the patient's body composition, such as a higher volume of distribution, can affect how heparin is distributed throughout the body, requiring higher doses to achieve the desired effect.

These factors contribute to the need for much higher doses of heparin to achieve the expected anticoagulant effect, making it a true form of resistance to the drug.

Apparent Heparin Resistance

In contrast, apparent heparin resistance refers to a situation in which the patient appears to require more heparin than expected, but the cause is not true pharmacokinetic resistance.26 Instead, the PTT does not accurately reflect the patient's anticoagulant status. For example, elevated levels of coagulation factors such as Factor VIII can produce a falsely short PTT, suggesting that the patient is under-anticoagulated when, in reality, the heparin level is already adequate. Clinicians who titrate heparin to the PTT may then keep increasing the dose, which can lead to the overuse of heparin and the risk of bleeding complications.26

ANTI-FACTOR Xa TEST

The anti-factor Xa test is an important monitoring tool for drugs like heparin, enoxaparin, and dalteparin. Unlike clotting tests such as the PTT, the anti-factor Xa test does not measure clotting time. It is a functional test that quantifies the enzymatic activity involved in coagulation. The test is often called chromogenic anti-factor Xa because it relies on a color change produced by the chemical reaction between the reagent and the substance being tested.4,5

The chromogenic test measures the activity of Factor Xa, which is essential for blood clotting. In the presence of an anticoagulant such as heparin, the test measures how much Factor Xa activity is inhibited.4,5 The results are reported in units/mL (e.g., units of heparin per mL), reflecting the concentration of the anticoagulant drug in the patient’s blood. The normal value for this test is 0: if no drug is present, no anti-factor Xa activity is detected.4,5

Clinical Uses of Anti-Factor Xa Testing

A primary use of anti-factor Xa testing is to monitor heparin therapy, especially in institutions where heparin infusions are used for anticoagulation.20 This test has become more commonly used than the PTT for monitoring heparin therapy because of its simplicity and lack of interference from factors such as factor VIII levels or warfarin. Anti-factor Xa can also be used to monitor LMWHs (e.g., enoxaparin and dalteparin) and fondaparinux, although the calibration curves for these drugs differ; each drug may need its own calibration, and the laboratory must adjust accordingly. Some oral anticoagulants, such as direct factor Xa inhibitors (e.g., apixaban, rivaroxaban), can also affect coagulation assays. To quantify their activity rapidly and accurately, the anti-factor Xa assay must be calibrated specifically to the DOAC being assessed.27

Advantages of anti-factor Xa for monitoring heparin include28

- Less interference: Unlike the PTT, which can be influenced by many factors (e.g., factor VIII levels, warfarin therapy), the anti-factor Xa test is more specific and less susceptible to interference, providing a more reliable result.

- Fewer calibration issues: Because the anti-factor Xa assay directly measures drug concentration, the heparin therapeutic range does not need to be re-established each time a new reagent lot is introduced, as it does with PTT testing. The therapeutic range for heparin is consistently set at 0.3 to 0.7 units/mL, which simplifies monitoring.

- Improved accuracy: The anti-factor Xa test provides a more accurate assessment of heparin levels, reducing the chance of over- or under-dosing, which can lead to complications like bleeding or thrombosis. This accuracy reduces the frequency of dosage adjustments and the associated risk of error.

- Less frequent retesting: Because there’s less need for frequent adjustments in dosing, patients may require fewer tests, which reduces laboratory workload and improves overall patient care.
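Because the heparin target does not shift with reagent lots, checking a result against it is simple. The following Python sketch classifies an anti-factor Xa level against the 0.3 to 0.7 units/mL range described above. The function name and the inclusive treatment of both boundaries are assumptions for illustration; actual heparin targets, and the dose changes that follow an out-of-range result, are set by each institution's protocol.

```python
# Hypothetical helper: classify a heparin anti-factor Xa result against the
# 0.3-0.7 units/mL therapeutic range described in the text. Boundaries are
# treated as inclusive (an assumption); institutional protocols may differ.
HEPARIN_ANTI_XA_RANGE = (0.3, 0.7)  # units/mL


def classify_heparin_anti_xa(level_units_per_ml: float,
                             target: tuple[float, float] = HEPARIN_ANTI_XA_RANGE) -> str:
    """Return 'below', 'within', or 'above' relative to the target range."""
    if level_units_per_ml < 0:
        raise ValueError("anti-factor Xa level cannot be negative")
    low, high = target
    if level_units_per_ml < low:
        return "below"
    if level_units_per_ml > high:
        return "above"
    return "within"


# A few hypothetical results
for level in (0.0, 0.25, 0.5, 0.85):
    print(f"{level:.2f} units/mL -> {classify_heparin_anti_xa(level)}")
```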

Anti-factor Xa Testing for LMWHs and Fondaparinux

The use of anti-factor Xa testing for LMWHs (such as enoxaparin and dalteparin) and fondaparinux has been an area of ongoing discussion. Although testing is not required for all patients on these medications, it can provide valuable information and improve patient safety in specific situations.29

In patients who are extremely obese or very small (e.g., children), the pharmacokinetics of LMWHs can be unpredictable.30 These patients may have altered drug clearance or distribution, so they may handle the drug differently than expected based on weight alone. In these cases, testing can confirm that the drug is working effectively and that the patient is within the therapeutic range.29

Renal dysfunction is another consideration.29 Patients with impaired renal function may not clear LMWH or fondaparinux as efficiently, leading to drug accumulation and potentially dangerous levels in the bloodstream. Testing anti-factor Xa levels in these patients helps assess if their drug concentrations are within a safe therapeutic window.29

Pregnancy introduces significant pharmacokinetic changes that can affect how LMWHs are distributed and cleared.29 These changes are especially pronounced in the third trimester, when women experience substantial physiological shifts, including weight gain, blood volume changes, and increased renal clearance. Regular anti-factor Xa testing during pregnancy, especially in the third trimester, helps ensure that dosing adjustments maintain effective prevention of venous thrombosis without putting the patient at risk of bleeding.29

Before surgery, a key concern in patients who have been taking LMWH or fondaparinux is whether the drug has cleared from the system.32 Although guidelines generally recommend holding the dose for about 24 hours before surgery, anti-factor Xa testing can be particularly useful in emergent situations or when clearance is uncertain. In these cases, testing can provide additional reassurance that the anticoagulant effect has sufficiently diminished before surgical intervention.

Anti-factor Xa Testing for Direct Factor Xa Inhibitors

Routine monitoring of DOAC concentrations is not necessary in standard clinical practice due to their predictable pharmacokinetics and wide therapeutic windows. However, in emergent situations, such as active bleeding, urgent surgical intervention, suspected overdose, or impaired renal function, assessment of anticoagulant activity may be warranted to guide clinical decision-making and ensure patient safety.

In these cases, chromogenic anti-factor Xa assays can be used to measure the anticoagulant effect of factor Xa inhibitors (e.g., apixaban, rivaroxaban, edoxaban). These assays are available in many hospital and reference laboratory settings and can provide relatively rapid and quantitative estimations of drug levels, provided they are calibrated specifically to the DOAC in question. While not universally available, when accessible and properly calibrated, they serve as a valuable tool for managing complex or high-risk situations involving DOAC therapy.32

COAGULATION FACTOR ACTIVITY TESTING

Coagulation factor activity testing is a straightforward, valuable method for evaluating various factors involved in the coagulation cascade.4,5 This test is especially useful in diagnosing factor deficiencies or other coagulation abnormalities. The most common reason for performing coagulation factor activity testing is to diagnose hereditary bleeding disorders caused by deficiencies in one or more coagulation factors. These include hemophilia A (a Factor VIII deficiency) and hemophilia B (a Factor IX deficiency). This testing may identify other rarer factor deficiencies that could result in bleeding problems.4,5

While INR is most commonly used for warfarin monitoring, coagulation factor activity testing (for example, chromogenic factor X activity) can be used as an alternative. This may be necessary when INR results are unreliable or unavailable, such as in patients whose lupus anticoagulant interferes with the INR, or when more specific information about the patient’s coagulation status is needed.

D-DIMER TEST

D-dimer is a protein fragment produced when plasmin breaks down fibrin, a major component of blood clots.33 This breakdown occurs during fibrinolysis: in simple terms, when a blood clot forms and then begins to dissolve, D-dimer is released into the bloodstream. The normal range for D-dimer varies with the testing method (whole-blood agglutination assays, ELISA [enzyme-linked immunosorbent assay], or latex immunoagglutination). Each manufacturer establishes its own normal range, and different manufacturers use different units (e.g., ng/mL, mcg/mL). Laboratories also frequently report results in units other than those recommended by the assay manufacturer.24,34
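Because D-dimer results may arrive in different units, a small Python sketch can illustrate normalizing a value to ng/mL before comparing it with a cutoff expressed in the same units. The function and unit table are hypothetical; the factors are ordinary mass-per-volume arithmetic (1 mcg/mL = 1,000 ng/mL). This converts units only: it does not make results from different assays interchangeable, because each manufacturer sets its own normal range.

```python
# Hypothetical conversion table: multiply by this factor to get ng/mL.
# Plain mass-per-volume conversions only; they do not reconcile
# differences between D-dimer assays or their normal ranges.
TO_NG_PER_ML = {
    "ng/mL": 1.0,
    "mcg/L": 1.0,      # 1 mcg/L = 1 ng/mL
    "mcg/mL": 1000.0,  # 1 mcg/mL = 1,000 ng/mL
    "mg/L": 1000.0,    # 1 mg/L = 1 mcg/mL = 1,000 ng/mL
}


def d_dimer_to_ng_per_ml(value: float, unit: str) -> float:
    """Convert a D-dimer result to ng/mL; raise for units not in the table."""
    if unit not in TO_NG_PER_ML:
        raise ValueError(f"unsupported unit: {unit!r}")
    return value * TO_NG_PER_ML[unit]


# The same hypothetical result reported two different ways
print(d_dimer_to_ng_per_ml(0.5, "mcg/mL"))
print(d_dimer_to_ng_per_ml(500, "ng/mL"))
```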

D-dimer testing is most commonly used as a screening tool to help rule out active thrombosis, such as a DVT or a pulmonary embolism (PE).33 For example, clinicians may order a D-dimer test for a patient presenting to the emergency department with calf pain. In a patient with a low clinical (pretest) probability, a normal D-dimer level essentially rules out an active DVT or PE without the need for further testing such as ultrasound.33

In patients who have experienced a previous thrombotic event (e.g., DVT or PE), D-dimer testing can help determine whether ongoing anticoagulation therapy is needed. Elevated D-dimer levels approximately one month after the acute event might suggest an increased risk of recurrence, and the patient may need to continue anticoagulation therapy for a longer period.33-36 Conversely, normal D-dimer levels can suggest the risk of recurrence is low, and the patient may be safely transitioned off anticoagulation therapy, depending on other clinical factors.35,36

PRACTICE PATIENT CASE

Joyce has been on warfarin and has maintained a therapeutic INR range for the past six weeks. She comes into your outpatient anticoagulation clinic, and reports no changes to her diet, exercise, or overall health. Her POC test result on your clinic’s machine shows an INR of 4.7. Following clinic policy, you send Joyce to the lab for venipuncture to confirm this critical value. After a couple of hours, the lab result comes back with an INR of 3.2. Now, you're in a bit of a dilemma. You inform Joyce of the new lab result, and she asks why there's a discrepancy, what test she should trust, and why the POC result isn’t aligning.

What to Communicate with the Patient

Despite advances, we have been unable to fully standardize INR testing across different methods. The fact that two testing methods yield different results does not automatically mean that one is correct and the other is incorrect; both could be slightly inaccurate. In medical testing, there are distinctions between accuracy, reliability, and repeatability, all of which contribute to overall test validity. Repeatability—how consistently a test produces the same result—is an important factor in assessing reliability.

The medical community and laboratory testing guidelines generally accept that a difference of 0.5 INR units between two different testing methods is reasonable. However, in clinical practice, a 0.5 INR difference can be significant.37 When managing warfarin therapy, such a discrepancy might mean the difference between adjusting a patient’s dose or keeping it the same, which adds to the complexity of interpreting INR results.

Ultimately, when discussing test discrepancies with patients, it's essential to reassure them that variability is expected and that INR management is based on trends rather than single data points. Clinical judgment, alongside repeat testing when necessary, helps ensure that anticoagulation therapy remains both safe and effective.
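A minimal Python sketch of the comparison in Joyce's case checks whether two INR results for the same patient agree within the 0.5 INR units generally accepted between methods. The function name and the choice to round the difference to two decimals are assumptions for illustration. Agreement within tolerance does not show which result is correct, and exceeding it does not by itself dictate a dose change.

```python
# Hypothetical helper: compare a point-of-care (POC) INR with a laboratory
# INR using the 0.5-unit agreement threshold described in the text.
ACCEPTABLE_INR_DIFFERENCE = 0.5  # INR units


def compare_inr(poc_inr: float, lab_inr: float,
                tolerance: float = ACCEPTABLE_INR_DIFFERENCE) -> dict:
    """Summarize the difference (POC minus lab) between two INR results."""
    # Round to two decimals to suppress floating-point noise in the subtraction
    difference = round(poc_inr - lab_inr, 2)
    return {
        "difference": difference,
        "within_tolerance": abs(difference) <= tolerance,
    }


# Joyce's practice case: POC INR 4.7, venipuncture INR 3.2
print(compare_inr(4.7, 3.2))
```

In Joyce's case the 1.5-unit gap is three times the accepted difference, which is why the clinic policy calls for confirmation and why repeat testing and trend review matter more than either single value.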

POINT OF CARE TESTING

Point of care testing (POCT) refers to the use of small, often handheld devices to perform diagnostic tests outside of a traditional laboratory. One of its earliest applications was INR testing, allowing INR measurements to be performed at the bedside or in outpatient settings.

POCT’s advantages include38

- Rapid turnaround time: INR results, for example, can be obtained in about a minute, whereas lab testing takes significantly longer.

- Home testing capability: Patients can monitor their INR levels at home, improving convenience and adherence.

- Ease of use: POCT devices are designed for simple operation, making them accessible for both healthcare providers and patients.

POCT also has some disadvantages.39 POCT devices tend to be expensive, both in terms of initial purchase and per-test cost compared to traditional lab testing. In addition, each device has specific testing guidelines. These may include temperature sensitivity, hematocrit limitations, and other operational constraints. However, for the majority of patients, these devices function effectively without issues.39

Understanding INR Result Discrepancies

Variability in results between testing methods has long been recognized, which is why the WHO introduced the INR system to standardize results across different methods. However, even with standardization, some variation persists because different testing systems use different thromboplastin reagents.

One key limitation is that thromboplastins are standardized for their International Sensitivity Index (ISI) only up to an INR of approximately 4.0 to 4.5. Beyond this range, variability between testing methods increases significantly, so INR values above 4.5, whether measured by a POC device or a lab test, become less precise.40 Clinicians should therefore interpret an INR of 5.0, 6.0, or 7.0 with caution, as these values are estimates rather than exact measurements.
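The ISI enters the result through the standard calculation INR = (patient PT / MNPT)^ISI, where MNPT is the geometric mean normal prothrombin time for the reagent and instrument. The Python sketch below applies that formula and flags results above the 4.5 limit discussed here. The PT, MNPT, and ISI values are hypothetical, and the flag threshold simply mirrors the text rather than any device's built-in behavior.

```python
# Sketch of the standard INR calculation: INR = (patient PT / MNPT) ** ISI.
PRECISION_LIMIT_INR = 4.5  # above roughly 4.0 to 4.5, INR values are less precise


def calculate_inr(patient_pt_s: float, mnpt_s: float, isi: float) -> float:
    """Return the INR from the patient PT, mean normal PT (seconds), and ISI."""
    if patient_pt_s <= 0 or mnpt_s <= 0 or isi <= 0:
        raise ValueError("PT, MNPT, and ISI must all be positive")
    return (patient_pt_s / mnpt_s) ** isi


def describe_inr(inr: float) -> str:
    """Label INR values above the precision limit as estimates."""
    if inr > PRECISION_LIMIT_INR:
        return f"INR {inr:.1f} (above {PRECISION_LIMIT_INR}; interpret as an estimate)"
    return f"INR {inr:.1f}"


# Hypothetical reagent (MNPT 12.0 s, ISI 1.0) and two hypothetical patient PTs
for pt_seconds in (30.0, 66.0):
    print(describe_inr(calculate_inr(pt_seconds, mnpt_s=12.0, isi=1.0)))
```

Because the ISI is an exponent, a reagent with an ISI above 1.0 amplifies the PT ratio: the same 2.5 ratio with an ISI of 1.2 gives an INR of about 3.0.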

Device Correlation Testing

When comparing INR results from different testing methods, we essentially perform correlation testing. This process assesses how well two testing methods align by analyzing multiple samples side by side and plotting the results on a correlation graph. Studies commonly use this approach: investigators test the same samples on two or more devices simultaneously and then measure how closely the results match. A strong correlation (often a correlation coefficient above 0.90, or 90%) suggests that the methods are generally in agreement. However, these studies rarely explain the inconsistencies visible in the data.

Correlation testing does not determine whether one method is "right" or "wrong"; it simply tells us how similar or different the results are between two methods. There is no true gold standard for INR measurement. No single test can definitively provide the "correct" INR. Many assume that the laboratory venipuncture method is the gold standard simply because it has been used the longest and is more complex. Recent studies have generally found that both methods tend to be accurate provided the testing devices are well-maintained and calibrated.41-43
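The Python sketch below illustrates what a correlation study reports. It assumes that "correlation" means the Pearson correlation coefficient and uses invented paired INR values, not data from any study. It also counts pairs that differ by more than the 0.5 INR units generally accepted between methods, showing how a high overall correlation can coexist with individual disagreements.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least two values")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a series has no variation")
    return sxy / sqrt(sxx * syy)


def summarize_agreement(poc: list[float], lab: list[float], tolerance: float = 0.5) -> dict:
    """Correlation plus per-pair differences (POC minus lab) for paired INR results."""
    differences = [p - l for p, l in zip(poc, lab)]
    return {
        "r": pearson_r(poc, lab),
        "mean_difference": sum(differences) / len(differences),
        "pairs_outside_tolerance": sum(1 for d in differences if abs(d) > tolerance),
    }


# Invented paired results: the same samples tested by a POC device and the lab
poc_inr = [1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
lab_inr = [1.6, 2.1, 2.4, 2.9, 3.6, 3.8, 4.3, 4.3]

summary = summarize_agreement(poc_inr, lab_inr)
print(f"r = {summary['r']:.2f}")
print(f"mean difference (POC - lab) = {summary['mean_difference']:.2f} INR units")
print(f"pairs differing by more than 0.5 = {summary['pairs_outside_tolerance']}")
```

With these invented values the coefficient is about 0.99, yet one pair (5.0 versus 4.3) differs by 0.7 INR units. The coefficient alone does not reveal that pair, and neither number indicates which method is correct.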

Pre-analytical errors in INR testing occur before the test is even run, affecting both POC and venipuncture testing methods. Pre-analytical errors can introduce variability into the results, making it harder to interpret the patient’s true anticoagulation status. Table 6 explains these errors in more detail.

| Table 6. Pre-Analytical Errors in INR Testing44 | |

| POC Errors | Venipuncture Errors |

| Squeezing the finger too hard | Under- or over-filling the collection tube |

| Too much time between lancing and applying blood to the test strip | Low HGB/HCT |

| Improper storage of test strips | Device not calibrated appropriately |

| ABBREVIATIONS: HGB/HCT = hemoglobin/hematocrit; INR = International Normalized Ratio; POC = point of care | |

Understanding pre-analytical errors highlights how both POC and venipuncture tests are susceptible to variability—even before testing begins. Recognizing these potential issues reinforces the need to interpret INR results cautiously, especially when discrepancies arise between testing methods. Proper sample collection techniques, equipment calibration, and awareness of external factors all play vital roles in producing accurate and reliable test results.

Evidence Supporting the Accuracy of POC INR Testing

How do we know that POC INR devices are reliable? The answer lies in extensive clinical evidence. Numerous large-scale clinical trials have demonstrated that these devices provide accurate and effective INR monitoring, leading to positive patient outcomes. In fact, some of the most significant trials in anticoagulation therapy—such as ARISTOTLE (for apixaban)45 and ROCKET AF (for rivaroxaban)30,31—used POC devices to monitor patients taking warfarin. These studies achieved excellent results, showing effective stroke prevention with low bleeding risks, reinforcing confidence in the reliability of POC INR testing.

Key Takeaways

POC devices are clinically validated. They have been used in major anticoagulation trials and have consistently produced reliable results. When clinicians receive an unexpected out-of-range INR, they must consider all possible factors:

- Could a pre-analytical error be affecting the test?

- Did the patient take an over-the-counter medication that interacted with warfarin?

- Is there an adherence issue that the patient forgot to mention?

A single abnormal result is no reason to panic. If the result seems inconsistent with the patient’s history and condition, a simple retest may be the best approach rather than immediately adjusting therapy.

ORAL ANTICOAGULATION

DOACs do not require routine monitoring due to their predictable pharmacokinetics and pharmacodynamics. However, laboratory testing can be useful in urgent situations to determine if the drug is still in the system. Table 7 lists the standard laboratory tests that can be used to monitor these anticoagulation agents.

| Table 7. Laboratory Testing for DOACs46 | ||

| Drug | Coagulometric Method(s) | Chromogenic Method(s) |

| Dabigatran | DTT, ECT | ECA, Anti-FIIa |

| Apixaban | – | Anti-FXa calibrated with apixaban |

| Rivaroxaban | – | Anti-FXa calibrated with rivaroxaban |

| Edoxaban | – | Anti-FXa calibrated with edoxaban |

| ABBREVIATIONS: Anti-FIIa = anti-factor IIa; Anti-FXa = anti-factor Xa; DOACs = direct oral anticoagulants; DTT = dilute thrombin time; ECA = ecarin chromogenic assay; ECT = ecarin clotting time | ||

Liquid Chromatography-Mass Spectrometry

Liquid chromatography-mass spectrometry (LC-MS) is considered the gold standard for measuring direct oral anticoagulants (DOACs) due to its high specificity, sensitivity, selectivity, and reproducibility.27 However, its use is limited in routine practice because of the complexity and labor-intensive nature of the method. LC-MS requires expensive equipment and highly trained personnel to perform the test correctly. As a result, while not routinely used in clinical practice, LC-MS serves as the reference method against which other testing methods are compared to assess their accuracy.27

Laboratory Testing for Dabigatran

Dilute Thrombin Time

Dilute thrombin time (DTT) is a coagulometric method specifically designed to measure the concentration of dabigatran.47 It is a modification of the standard thrombin time (TT), which is extremely sensitive to dabigatran and often becomes excessively prolonged. By diluting the patient's plasma (typically with normal pooled plasma) before adding thrombin, DTT establishes a linear relationship between dabigatran concentration and clotting time, allowing a more accurate, quantitative assessment of the drug's anticoagulant activity. This makes DTT practical for routine laboratories, unlike LC-MS, which is used extensively in research and clinical trials but cannot be used for rapid routine testing.
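The linear relationship is what allows a laboratory to convert a DTT clotting time into a dabigatran concentration through a calibration curve. The Python sketch below assumes a simple straight-line least-squares fit and uses invented calibrator values; real assays use manufacturer-supplied calibrators and validated analyzer software, and the function names here are hypothetical.

```python
# Sketch: fit a straight-line calibration curve (clotting time vs. dabigatran
# concentration) by ordinary least squares, then invert it to estimate the
# concentration for a patient's clotting time. Calibrator values are invented.


def fit_line(concentrations: list[float], clotting_times: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) of the least-squares line: time = slope * conc + intercept."""
    n = len(concentrations)
    if n != len(clotting_times) or n < 2:
        raise ValueError("need at least two paired calibrator points")
    mean_c = sum(concentrations) / n
    mean_t = sum(clotting_times) / n
    s_ct = sum((c - mean_c) * (t - mean_t) for c, t in zip(concentrations, clotting_times))
    s_cc = sum((c - mean_c) ** 2 for c in concentrations)
    if s_cc == 0:
        raise ValueError("calibrator concentrations must differ")
    slope = s_ct / s_cc
    return slope, mean_t - slope * mean_c


def estimate_concentration(clotting_time_s: float, slope: float, intercept: float) -> float:
    """Invert the calibration line; values outside the calibrated range are unreliable."""
    if slope == 0:
        raise ValueError("flat calibration curve cannot be inverted")
    return (clotting_time_s - intercept) / slope


# Hypothetical calibrators: dabigatran (ng/mL) and DTT clotting time (seconds)
calibrator_conc = [0.0, 50.0, 100.0, 200.0, 400.0]
calibrator_time = [30.0, 40.0, 50.0, 70.0, 110.0]

slope, intercept = fit_line(calibrator_conc, calibrator_time)
patient_estimate = estimate_concentration(60.0, slope, intercept)
print(f"slope = {slope:.3f} s per ng/mL, intercept = {intercept:.1f} s")
print(f"estimated dabigatran: {patient_estimate:.0f} ng/mL")
```

Reading results only within the span of the calibrators is a deliberate limitation: outside that span the linear relationship has not been verified.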

Ecarin Clotting Time

The Ecarin Clotting Time (ECT) is a highly sensitive test in which clotting time is directly related to dabigatran concentration.48 In this assay, ecarin, a metalloprotease derived from the venom of Echis carinatus (the saw-scaled viper), is added to the plasma sample. Ecarin converts prothrombin to meizothrombin, an intermediate that promotes clotting. Dabigatran inhibits meizothrombin, so in its presence the clotting time is prolonged.48

The ECT has a linear dose-response relationship and can be used to measure dabigatran levels within the therapeutic range. Due to its simplicity, the ECT can be automated for use with modern coagulation analyzers, although performance verification is required for accuracy.48

While the ECT is primarily a research tool with limited clinical availability in the United States, the development of commercial kits could potentially improve its practicality. However, these kits have not been fully standardized or validated for dabigatran, and thus their use may be problematic.48

It is important to note that low prothrombin levels or hypofibrinogenemia can lead to falsely prolonged clotting times that are disproportionate to dabigatran or other DTI concentrations. For these reasons, the ECT is not recommended for emergency monitoring of anticoagulant effects, despite its inclusion in some prescribing information.

Ecarin Chromogenic Assay

The Ecarin Chromogenic Assay (ECA) is a quantitative chromogenic test specifically designed to assess the concentration of dabigatran, a DTI.48 Unlike some other coagulation tests, the ECA is not influenced by prothrombin or fibrinogen levels, which can cause inaccurate results in other assays. This makes the ECA particularly useful when these factors may be abnormal, such as in patients with coagulopathies or low fibrinogen levels. The linear dose-response relationship between dabigatran concentration and the chromogenic signal allows the ECA to provide precise, quantitative measurement across the therapeutic range.48

Although the ECA is a reliable method for assessing dabigatran activity, it is not widely available due to its complexity and the need for specialized equipment.48 The assay requires appropriate calibration and performance verification to ensure accuracy. While it has shown promise for emergency settings, such as in cases of bleeding or urgent surgery, its clinical use is still somewhat limited, primarily due to availability issues and the high cost of the reagents.48

Despite these limitations, the ECA remains a valuable tool for assessing the anticoagulant effect of dabigatran in specialized clinical scenarios where precise quantification of drug levels is necessary.

Anti-Factor IIa Testing for DTIs

The anti-factor IIa assay is a valuable tool for precisely quantifying the anticoagulant effect of DTIs such as dabigatran.47 The assay measures the cleavage of a thrombin substrate: patient plasma is mixed with thrombin and a chromogenic substrate that thrombin cleaves to produce a measurable signal. If dabigatran is present, it inhibits thrombin activity and reduces substrate cleavage, and the extent of inhibition correlates with the dabigatran concentration. This assay is particularly useful when accurate quantification of dabigatran levels is critical, such as in emergencies or in patients with renal impairment.48

CONCLUSION

When it comes to clotting and anticoagulation, pharmacists need expertise. They must consider the reason for testing, whether screening, diagnosis, or monitoring. Patient factors complicate the decision, and unexpected results need to be investigated and addressed. Laboratory results are the clues that inform the detective work of anticoagulation.


Post Test

View Questions for Laboratory Monitoring of Anticoagulation

1. Which of the following laboratory tests measures both the extrinsic and common coagulation pathways?

A. Activated Partial Thromboplastin Time

B. Activated Whole Blood Clotting Time

C. Prothrombin Time

2. Which of the following is a situation in which the INR would be the preferred test?

A. Diagnosing disseminated intravascular coagulation

B. Establishing a therapeutic range for heparin

C. Bedside use in cardiac procedures

3. In which situation would the anticoagulation team need to employ anti-factor Xa testing for patients using LMWH?

A. In patients with hepatic impairment

B. In post-surgical patients

C. In patients who are extremely obese or very small

4. Alejandro is a gentleman who has received acute treatment for a pulmonary embolism. He has antiphospholipid syndrome. He also has a heart block. Which of the following tests should your anticoagulation team use to monitor warfarin going forward?

A. Factor X activity level

B. Anti-Factor Xa level

C. INR/PTT

5. What do factor activity levels measure?

A. Factor activity levels are reported as the laboratory’s geometric mean level, indicating how the factor compares to more than 20 normal individuals.

B. Factor activity levels are reported as a percentage of normal, indicating how much the factor has increased compared to a healthy individual.

C. Factor activity levels are reported as a percentage of normal, indicating how much the factor has decreased compared to a healthy individual.

6. A patient’s INR seems “off.” It remains elevated despite adjustments in warfarin doses. The pharmacist thinks it’s a pharmacodynamic issue. What should the anticoagulation team check?

A. INR, but use a different lab

B. Factor II and factor X activity

C. Anti-Factor Xa level

7. In which of the following situations would the Activated Whole Blood Clotting Time (ACT) be used?

A. Monthly monitoring of patients with APS

B. Bedside Use in Cardiac Procedures

C. Routine monitoring in pregnant women

8. A 34-year-old pregnant woman is admitted for a DVT. The attending orders a heparin drip at an 80 units/kg bolus, followed by an 18 units/kg/hour infusion. Over time, her infusion rate is adjusted to approximately 2000 units/hour, which equates to 25.7 units/kg/hour, a relatively high heparin dose. She reports to the nurse that she is “peeing blood.” A STAT hemoglobin shows it has dropped from 11.2 to 6.8 g/dL since admission. Given these complications, which of the following steps should the medical team take FIRST?

A. Ascertain that the patient is indeed pregnant and it is not twins

B. Draw the anti-factor Xa level

C. Confirm that the data was entered into the infusion pump correctly

9. Which of the following can be monitored using the anti-factor Xa test?

A. heparin, enoxaparin, and dalteparin

B. heparin, apixaban, and rivaroxaban

C. enoxaparin, dalteparin, and warfarin

10. This is a description of a device: reports test results rapidly, convenient for patients, is easy to use. What is it?

A. Laboratory-based testing

B. Point-of-care testing device

C. Correlation testing

11. What does correlation testing measure?

A. Which method is right and which method is wrong

B. The quantity of blood in the patient’s sample

C. Differences between the results from two methods

12. Jack is a patient on warfarin who works as a laborer. He uses POC testing. He has had three unexpected out-of-range INRs in the last five days. What is the MOST LIKELY reason?

A. Nonadherence

B. Pregnancy

C. OTC medication use

References

- Chaturvedi S, Brodsky RA, McCrae KR. Complement in the Pathophysiology of the Antiphospholipid Syndrome. Front Immunol. 2019;10:449. doi:10.3389/fimmu.2019.00449

- Blennerhassett R, Favaloro E, Pasalic L. Coagulation studies: achieving the right mix in a large laboratory network. Pathology. 2019;51(7):718-722.

- Dorgalaleh A, Favaloro EJ, Bahraini M, Rad F. Standardization of Prothrombin Time/International Normalized Ratio (PT/INR). Int J Lab Hematol. 2021;43(1):21-28.

- Dela Pena LE. Hematology: blood coagulation tests. In: Lee M, ed. Basic skills in interpreting lab reports. 5th ed. Bethesda, MD: ASHP; 2013:373-399.

- DeMott WR. Coagulation. In: Jacobs et al, eds. Laboratory test handbook. 3rd ed. Hudson, OH: Lexi-Comp; 1994:398-480.

- Riley RS, Rowe D, Fisher LM. Clinical utilization of the international normalized ratio (INR). J Clin Lab Anal. 2000;14(3):101-114. doi:10.1002/(sici)1098-2825(2000)14:3<101::aid-jcla4>3.0.co;2-a

- McRae HL, Militello L, Refaai MA. Updates in Anticoagulation Therapy Monitoring. Biomedicines. 2021;9(3):262. doi:10.3390/biomedicines9030262

- Gardiner C, Coleman R, de Maat MPM, et al. International Council for Standardization in Haematology (ICSH) laboratory guidance for the evaluation of haemostasis analyser-reagent test systems. Part 1: Instrument-specific issues and commonly used coagulation screening tests. Int J Lab Hematol. 2021;43(2):169-183. doi:10.1111/ijlh.13411

- Lau M, Huh J. Retrospective Analysis of the Effect of Argatroban and Coumadin on INR Values in Heparin Induced Thrombocytopenia. Blood. 2006;108(11):4118. doi:10.1182/blood.V108.11.4118.4118

- Ansell J, Hirsh J, Hylek E, Jacobson A, Crowther M, Palareti G. Pharmacology and management of the vitamin K antagonists: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines (8th Edition). Chest. 2008;133(6 Suppl):160S-198S. doi:10.1378/chest.08-0670

- 7 Procoagulators. Transfus Med Hemother. 2009;36(6):419-436.

- COUMADIN (warfarin) prescribing information. Bristol-Myers Squibb Company. Accessed April 11, 2025. https://www.accessdata.fda.gov/drugsatfda_docs/label/2010/009218s108lbl.pdf

- The Joint Commission. R3 Report—Requirement, Rationale, Reference. National Patient Safety Goals for Anticoagulant Therapy. Accessed April 10, 2025. https://www.jointcommission.org/-/media/tjc/documents/standards/r3-reports/r3_19_anticoagulant_therapy_rev_final1.pdf?db=web&hash=7C6FA69A5DF9294B7BADF2B52C653FCA

- Masucci M, Li Kam Wa A, Shingleton E, Martin J, Mahir Z, Breen K. Point of care testing to monitor INR control in patients with antiphospholipid syndrome. EJHaem. 2022;3(3):899-902. doi:10.1002/jha2.522

- Rosborough TK, Jacobsen JM, Shepherd MF. Relationship between chromogenic factor X and international normalized ratio differs during early warfarin initiation compared with chronic warfarin administration. Blood Coagul Fibrinolysis. 2009;20(6):433-435. doi:10.1097/MBC.0b013e32832ca31f

- Moll S, Ortel TL. Monitoring warfarin therapy in patients with lupus anticoagulants. Ann Intern Med. 1997;127:177-185.

- McGlasson DL, Romick BG, Rubal BJ. Comparison of a chromogenic factor X assay with international normalized ratio for monitoring oral anticoagulation therapy. Blood Coagul Fibrinolysis. 2008;19:513-517.

- Moll S. Antiphospholipid Antibody Syndrome and Thrombosis. Webinar. Accessed April 30, 2025. http://www.standingstoneinc.com/Webinars/WebinarArchive.aspx

- What Is The Normal Range For PTT? | Essential Insights. Accessed April 11, 2025. https://wellwisp.com/what-is-the-normal-range-for-ptt/

- Hirsh J, Bauer KA, Donati MB, Gould M, Samama MM, Weitz JI. Parenteral anticoagulants: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines (8th Edition) [published correction appears in Chest. 2008 Aug;134(2):473]. Chest. 2008;133(6 Suppl):141S-159S. doi:10.1378/chest.08-0689

- May JE, Siniard RC, Taylor LJ, Marques MB, Gangaraju R. From Activated Partial Thromboplastin Time to Antifactor Xa and Back Again. Am J Clin Pathol. 2022;157(3):321-327. doi:10.1093/ajcp/aqab135

- Bates SM, Weitz JI, Johnston M, Hirsh J, Ginsberg JS. Use of a fixed activated partial thromboplastin time ratio to establish a therapeutic range for unfractionated heparin. Arch Intern Med. 2001;161(3):385-391. doi:10.1001/archinte.161.3.385

- Barbosa ACN, Montalvão SAL, Barbosa KGN, et al. Prolonged APTT of unknown etiology: A systematic evaluation of causes and laboratory resource use in an outpatient hemostasis academic unit. Res Pract Thromb Haemost. 2019;3(4):749-757. Published 2019 Sep 8. doi:10.1002/rth2.12252

- Campbell S. Hemostasis. In: Contemporary Practice in Clinical Chemistry. Academic Press; 2020:445-467.

- Evidence-based Medicine Consult. Lab Test: Activated Clotting Time (ACT; Activated Coagulation Time). Accessed April 11, 2025. https://www.ebmconsult.com/articles/lab-test-act-activated-clotting-time

- Maier CL, Connors JM, Levy JH. Troubleshooting heparin resistance. Hematology Am Soc Hematol Educ Program. 2024;(1):186–191.

- Qiao J, Tran MH. Challenges to Laboratory Monitoring of Direct Oral Anticoagulants. Clin Appl Thromb Hemost. 2024;30:10760296241241524. doi:10.1177/10760296241241524

- Francis JL, Groce JB 3rd; Heparin Consensus Group. Challenges in variation and responsiveness of unfractionated heparin. Pharmacotherapy. 2004;24(8 Pt 2):108S-119S. doi:10.1592/phco.24.12.108s.36114

- Babin JL, Traylor KL, Witt DM. Laboratory Monitoring of Low-Molecular-Weight Heparin and Fondaparinux. Semin Thromb Hemost. 2017;43(3):261-269. doi:10.1055/s-0036-1581129

- ROCKET AF Study Investigators. Rivaroxaban-once daily, oral, direct factor Xa inhibition compared with vitamin K antagonism for prevention of stroke and Embolism Trial in Atrial Fibrillation: rationale and design of the ROCKET AF study. Am Heart J. 2010;159(3):340-347.e1. doi:10.1016/j.ahj.2009.11.025

- Raschke R, Hirsh J, Guidry JR. Suboptimal monitoring and dosing of unfractionated heparin in comparative studies with low-molecular-weight heparin. Ann Intern Med. 2003;138(9):720-723. doi:10.7326/0003-4819-138-9-200305060-00008

- Sukumar S, Cabero M, Tiu S, Fang MC, Kogan SC, Schwartz JB. Anti-factor Xa activity assays of direct-acting oral anticoagulants during clinical care: An observational study. Res Pract Thromb Haemost. 2021;5(4):e12528. doi:10.1002/rth2.12528

- Weitz J, Fredenburgh J, Eikelboom J. A Test in Context: D-Dimer. J Am Coll Cardiol. 2017;70(19):2411-2420. doi:10.1016/j.jacc.2017.09.024

- Johnson ED, Schell JC, Rodgers GM. The D-dimer assay. Am J Hematol. 2019;94(7):833-839. doi:10.1002/ajh.25482

- Kearon C, Parpia S, Spencer FA, et al. D-dimer levels and recurrence in patients with unprovoked VTE and a negative qualitative D-dimer test after treatment. Thromb Res. 2016;146:119-125. doi:10.1016/j.thromres.2016.06.023

- Kearon C, Stevens SM, Julian JA. D-Dimer Testing in Patients With a First Unprovoked Venous Thromboembolism. Ann Intern Med. 2015;162(9):671. doi:10.7326/L15-5089-2

- Isert M, Miesbach W, Schüttfort G, et al. Monitoring anticoagulant therapy with vitamin K antagonists in patients with antiphospholipid syndrome. Ann Hematol. 2015;94(8):1291-1299. doi:10.1007/s00277-015-2374-3

- Raimann FJ, Lindner ML, Martin C, et al. Role of POC INR in the early stage of diagnosis of coagulopathy. Pract Lab Med. 2021;26:e00238. doi:10.1016/j.plabm.2021.e00238

- Medical Advisory Secretariat. Point-of-Care International Normalized Ratio (INR) Monitoring Devices for Patients on Long-term Oral Anticoagulation Therapy: An Evidence-Based Analysis. Ont Health Technol Assess Ser. 2009;9(12):1-114.

- Fitzmaurice DA, Geersing GJ, Armoiry X, Machin S, Kitchen S, Mackie I. ICSH guidance for INR and D-dimer testing using point of care testing in primary care. Int J Lab Hematol. 2023;45(3):276-281. doi:10.1111/ijlh.14051

- Ganapati A, Mathew J, Yadav B, et al. Comparison of Point-of-Care PT-INR by Hand-Held Device with Conventional PT-INR Testing in Anti-phospholipid Antibody Syndrome Patients on Oral Anticoagulation. Indian J Hematol Blood Transfus. 2023;39(3):450-455. doi:10.1007/s12288-022-01611-4

- Bitan J, Bajolle F, Harroche A, et al. A retrospective analysis of discordances between international normalized ratio (INR) self-testing and INR laboratory testing in a pediatric patient population. Int J Lab Hematol. 2021;43(6):1575-1584. doi:10.1111/ijlh.13652

- Munir R, Schapkaitz E, Noble L, et al. A Comprehensive Clinical Assessment of the LumiraDx International Normalized Ratio (INR) Assay for Point-of-Care Monitoring in Anticoagulation Therapy. Diagnostics (Basel). 2024;14(23):2683. Published 2024 Nov 28. doi:10.3390/diagnostics14232683

- Arine K, Rodriquez C, Sanchez K. Reliability of Point-of-Care International Normalized Ratio Measurements in Various Patient Populations. POC: J of Near-Patient Test & Tech. 2020;19(1):12-18. doi:10.1097/POC.0000000000000197

- Ogawa S, Shinohara Y, Kanmuri K. Safety and efficacy of the oral direct factor xa inhibitor apixaban in Japanese patients with non-valvular atrial fibrillation. -The ARISTOTLE-J study-. Circ J. 2011;75(8):1852-1859. doi:10.1253/circj.cj-10-1183

- Margetić S, Goreta SŠ, Ćelap I, Razum M. Direct oral anticoagulants (DOACs): From the laboratory point of view. Acta Pharm. 2022;72(4):459-482. doi:10.2478/acph-2022-0034

- Dunois C. Laboratory Monitoring of Direct Oral Anticoagulants (DOACs). Biomedicines. 2021;9(5):445. doi:10.3390/biomedicines9050445

- Gosselin RC, Dwyre DM, Dager WE. Measuring Dabigatran Concentrations Using a Chromogenic Ecarin Clotting Time Assay. Ann Pharmacother. 2013;47(12):1635-1640. doi:10.1177/1060028013509074

Additional Courses Available for Anticoagulation

Vitamin K Antagonist Pharmacology, Pharmacotherapy and Pharmacogenomics – 1 hour

Anticoagulation Management Pearls – 1.5 hours

Clinical Overview of Direct Oral Anticoagulants – 1.25 hours

Laboratory Monitoring of Anticoagulation – 2 hours

Heparin/Low Molecular Weight Heparin and Fondaparinux Pharmacology and Pharmacotherapy – 0.5 hours

Developing an Anticoagulation Clinic – 1 hour

Pharmacist Reimbursement for Anticoagulation Services – 0.5 hours

Risk Management in Anticoagulation – 1 hour

A Practical Approach to Perioperative Oral Anticoagulation Management – 2 hours

Management of Hypercoagulable States – 1.5 hours

Challenging Topics in Anticoagulation – 2 hours

Available Strategies to Reverse Anticoagulation Medications – 2 hours

Drug Interaction Cases with Anticoagulation Therapy – 1 hour